In this tutorial we will walk through the process of deploying a React application with routes in a Docker container using the NGINX base image.

1. Introduction

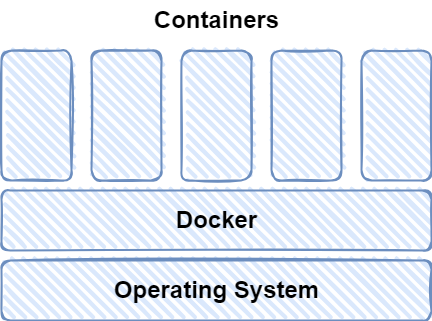

Docker is a popular platform for creating, sharing, and running applications in containers.

Containers are lightweight software packages that run in isolation on top of the Docker Engine. They share the kernel of the operating system on which the Docker Engine runs, so they do not need a separate operating system of their own.

As a result, they are lighter and smaller than older technologies such as virtual machines. They also behave consistently across environments, so you get the same results whether you run a container on a Windows or a Unix machine (on Windows and macOS, Docker Desktop provides the Linux kernel through a lightweight virtual machine).

This approach transformed how software is deployed, shipped, and run. If you have heard of Kubernetes, an open-source container orchestration system built around this containerization technology, you will understand how significant Docker is in modern development infrastructure.

In this tutorial, we will take a React app with routes that we created in a previous blog post and deploy it using the nginx base image.

2. Create the Docker image

The app we are going to deploy

If you want to follow along with this tutorial, clone this repository and check out the commit where the React routing application is already implemented:

$ git checkout df59395c8b

Then install all dependencies:

$ npm install

Then build the web files that we are going to deploy:

$ npm run build

You will find an index.html and an index.js file inside the dist folder. These are the files we need to deploy to a web server.
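Before moving on, we can double-check that the build produced the files NGINX will serve. Here is a minimal POSIX shell sketch. It assumes the build output lives in dist as described above, and the function name check_dist is our own, purely for illustration.

```shell
# Hypothetical helper: verify that the build output contains the files
# we expect to deploy (index.html and index.js, as described above).
check_dist() {
  local dir="${1:-dist}"   # defaults to ./dist, relative to the project root
  local missing=0 f
  for f in index.html index.js; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"        # 0 when both files exist, 1 otherwise
}
```

For example, running check_dist from the project root after npm run build should return a zero exit status. If it reports a missing file, rerun the build before creating the image.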

To see what the application looks like, start the development server:

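$ npm start

Then visit http://localhost:8080/, where you will see a blue header titled “Home”. Stop the development server (Ctrl+C) before continuing, because the container we run later also uses port 8080.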

Install Docker

If you haven’t installed Docker yet, you can download it from the official website.

You can verify that Docker is installed by running:

$ docker --version

If you run:

$ docker ps

you should see either a list of running containers or an empty list with only the following header:

CONTAINER ID   IMAGE     COMMAND   CREATED   STATUS    PORTS     NAMES

If instead you see a message like this:

error during connect: This error may indicate that the docker daemon is not running.

it means that the Docker daemon is not running. If you are using Windows, you can open the “Docker Desktop” app, which starts the Docker daemon automatically.

If you use Docker frequently, you should configure Docker to start on boot.
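The checks above can be combined into a small shell function. This is a sketch, not an official tool. docker_status is a hypothetical name, and the function relies on docker info exiting with a non-zero status when the CLI cannot reach the daemon.

```shell
# Hypothetical helper: report whether the Docker CLI is installed and
# whether the Docker daemon is reachable.
docker_status() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "missing"        # the docker CLI is not on PATH
  elif ! docker info >/dev/null 2>&1; then
    echo "daemon-down"    # CLI found, but it cannot talk to the daemon
  else
    echo "ok"             # CLI installed and daemon reachable
  fi
}
```

Running docker_status prints missing, daemon-down, or ok. This tells you whether to install Docker, start the daemon, or carry on.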

Basic Docker commands

Docker has four main entities you need to remember:

- images

- containers

- volumes

- networks

In this tutorial, we will only discuss the first two, because we are containerizing a front-end app that needs neither volumes nor networks. Volumes persist data, such as large files, beyond the lifetime of a container, and networks let containers communicate with each other. Our example has only one image and one container.

Briefly, images and containers are:

- Images are read-only templates that define everything a container needs: software dependencies, files, the entry point, and so on. They serve as the blueprint from which containers are created.

- Containers are the running instances of images.

We can easily tell an image and a container apart if we think in terms of a blueprint and what is built from it. A single image can be used to start many containers.

To list all running containers, run:

$ docker ps

or

$ docker container ls

To list all images, run:

$ docker image ls

or

$ docker images

We don’t have any images or containers yet, so both lists are empty. To list all containers, including stopped ones, use:

$ docker ps -a

Writing the Dockerfile

In a file called “Dockerfile”, we define the image’s dependencies and the steps needed to build it. A Dockerfile contains a sequence of instructions, such as:

- FROM, to start from a base image in a registry such as Docker Hub

- RUN, to execute commands while the image is being built

- COPY, to copy files from the build context into the image

- and more; see the official Dockerfile reference for the full list

We usually follow these steps to create an image:

- Search Docker Hub for the base image we want to build on.

- Read the image’s documentation.

- Reference that base image in the FROM instruction.

- Add the remaining instructions we need.

In our example we will use the NGINX base image.

If you are interested, you can have a look at the base image’s documentation.

In our example we want to fulfil the following requirements:

- Copy the static files to the NGINX public html directory.

- Because we are deploying a React app with client-side routing, configure NGINX to serve index.html for every request that does not match a file, so that the React router can handle the path in the browser.

The NGINX configuration file will look like this:

nginx.conf

events {}

http {
    server {
        listen 80;
        root /usr/share/nginx/html;
        include /etc/nginx/mime.types;

        location / {
            try_files $uri $uri/ /index.html;
        }
    }
}

This configuration declares:

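- The port on which to serve the static files (80).

- The root folder that contains them.

- The MIME types (from mime.types), so that files such as index.js are sent with the correct Content-Type header.

- How requests under the / location are resolved: NGINX first looks for a file matching the URI ($uri), then a directory ($uri/), and if neither exists it serves /index.html so the React router can handle the path.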
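To make the try_files rule concrete, here is a rough POSIX shell emulation of how it resolves a request path against the web root. It is a simplified model for illustration only. try_files_sketch is a hypothetical name, and real NGINX also handles index files, URL decoding, and more. The logic is: check for a file, then a directory, and otherwise fall back to /index.html.

```shell
# Simplified model of: try_files $uri $uri/ /index.html;
# Prints which URI NGINX would end up serving for a given request path.
try_files_sketch() {
  local root="$1" uri="$2"
  if [ -f "$root$uri" ]; then
    printf '%s\n' "$uri"            # $uri: an existing file, e.g. /index.js
  elif [ -d "$root$uri" ]; then
    printf '%s/\n' "${uri%/}"       # $uri/: an existing directory
  else
    printf '%s\n' "/index.html"     # fallback: let the React router handle it
  fi
}
```

For example, a request for /index.js is served directly. A client-side route such as /some/client/route (a placeholder) has no matching file, so NGINX serves index.html and the React router renders the right page in the browser.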

We can now start writing our Dockerfile:

Dockerfile

FROM nginx
COPY dist /usr/share/nginx/html
COPY nginx.conf /etc/nginx/nginx.conf

These instructions:

- Use the official nginx base image

- Copy the dist folder with the web files into NGINX’s default web root, /usr/share/nginx/html

- Copy our nginx.conf over the default NGINX configuration at /etc/nginx/nginx.conf inside the image (on Unix-like systems, configuration files usually live under /etc)

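Now that we’ve defined both the NGINX configuration file and the Dockerfile, we can build the Docker image by running the following command from the project root:

$ docker build -t routing .

The -t routing flag names (tags) the image routing. The trailing . tells Docker to use the current directory as the build context; this directory contains the Dockerfile, nginx.conf, and the dist folder. Docker prints the steps it executes: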

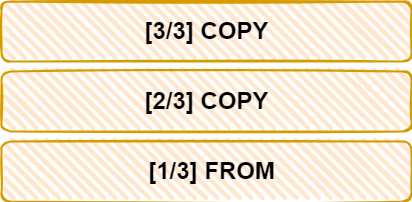

[+] Building 13.1s (9/9) FINISHED
... 0.0s
=> [1/3] FROM docker.io/library/nginx@sha256:86e53c4c16a6a276b204b0fd3a8143d86547c967dc8258b3d47c3a21bb68d3c6 8.9s
...
=> [2/3] COPY dist /usr/share/nginx/html 0.3s
=> [3/3] COPY nginx.conf /etc/nginx/nginx.conf 0.1s
=> exporting to image 0.1s
=> => exporting layers 0.1s
=> => writing image sha256:ef95755901dd6a4e863d2ed63e5818eed766382f052d146ee1ce25606d7cce62 0.0s
=> => naming to docker.io/library/routing 0.0s

Each instruction in the Dockerfile appears as a numbered step, for example [2/3], where the numbers in brackets are the current step and the total number of steps. Instructions that change the filesystem, such as COPY, each add a layer on top of the base image’s layers, so an image is made up of multiple layers, as shown in the image below:

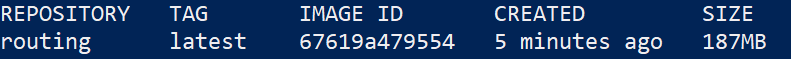
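One rough way to picture how layers combine is that when a path exists in several layers, the topmost one wins. This is why our COPY nginx.conf layer replaces the default /etc/nginx/nginx.conf shipped in the nginx base image. The POSIX shell sketch below models each layer as a plain directory. It is only a toy model: layer_lookup is a hypothetical name, it ignores deletions, and it is not how Docker’s storage drivers are implemented.

```shell
# Toy model of a layered filesystem. Layers are directories given from bottom
# (base image) to top (last Dockerfile step); for a given path, the copy in the
# highest layer that contains it wins.
layer_lookup() {
  local path="$1" found="" layer
  shift
  for layer in "$@"; do
    # A later (higher) layer overrides any match from the layers below it.
    if [ -e "$layer/$path" ]; then
      found="$layer/$path"
    fi
  done
  [ -n "$found" ] || return 1   # the path exists in no layer
  printf '%s\n' "$found"
}
```

For example, suppose a hypothetical base directory holds the default etc/nginx/nginx.conf and a higher copy_conf directory holds our version. Then `layer_lookup etc/nginx/nginx.conf base copy_conf` returns the copy from copy_conf, while etc/nginx/mime.types still resolves to the base layer.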

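We can verify that the image has been created by running:

$ docker images

The output table should include an entry whose REPOSITORY is routing and whose TAG is latest, the default tag when none is given.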

In the next section we will run the container from this image.

3. Run the container

Run command

To run the image we have just created, use the following command:

$ docker run --name routing-container -d -p 8080:80 routing

This command will:

- Create a container named routing-container

- Run the container in detached mode (-d), in the background, so you don’t need to keep the terminal open

- Publish port 80 inside the container, where NGINX listens, on port 8080 of the host (-p takes the form HOST:CONTAINER)
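The -p value is easy to get backwards. The POSIX shell sketch below splits a two-part HOST:CONTAINER mapping such as 8080:80. split_port_mapping is a hypothetical name, and the function deliberately rejects the longer forms Docker also accepts, such as an IP address prefix or a /udp suffix.

```shell
# Hypothetical helper: split a two-part port mapping of the form HOST:CONTAINER,
# as used by "docker run -p" and the "ports" list in docker-compose.yml.
# Longer forms (IP prefix, /udp suffix) are rejected rather than parsed.
split_port_mapping() {
  local spec="$1"
  case "$spec" in
    *:*:*|*[!0-9:]*)
      echo "unsupported mapping: $spec" >&2; return 1 ;;
    [0-9]*:[0-9]*)
      # Left of the colon: port on the host. Right: port inside the container.
      printf 'host=%s container=%s\n' "${spec%%:*}" "${spec#*:}" ;;
    *)
      echo "unsupported mapping: $spec" >&2; return 1 ;;
  esac
}
```

For example, `split_port_mapping 8080:80` prints host=8080 container=80. In other words, requests to port 8080 on your machine reach NGINX on port 80 inside the container.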

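If you run:

$ docker ps

you will see our container, routing-container, listed in the table.

Visit http://localhost:8080/ to see the app running. Thanks to the try_files fallback in nginx.conf, reloading the browser on any of the app’s routes also returns index.html rather than a 404 error.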
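To check from the command line that the container serves both the root page and client-side routes, a small curl-based sketch can help. It assumes curl is installed. route_status is a hypothetical name, and /some/client/route is a placeholder for any route of your app. Because of the try_files fallback, both requests in the usage comments should return 200.

```shell
# Hypothetical smoke test: print the HTTP status code returned for base URL + path.
# curl writes 000 when it gets no HTTP response (e.g. nothing is listening).
route_status() {
  local base="$1" path="$2"
  curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$base$path"
}

# Example, with routing-container from above running:
#   route_status http://localhost:8080 /                   # expected: 200
#   route_status http://localhost:8080 /some/client/route  # expected: 200 (index.html fallback)
```

If a client-side route returns 404 instead, the custom nginx.conf was most likely not copied into the image.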

Using compose

An easier way to run the container, and a common best practice, is the docker compose command.

With Docker Compose, we don’t have to pass parameters on the command line every time, as we did in the previous example when we mapped the ports. Instead, parameters such as port mappings and environment variables are defined in a YAML file called docker-compose.yml.

In our example, the file looks like this:

docker-compose.yml

services:
  routing:
    image: routing-image
    build:
      context: .
      dockerfile: Dockerfile
    ports:
      - "8080:80"

Stop the previous container first (for example with docker stop routing-container), because it is still using port 8080 on the host, and then run:

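$ docker compose up

This command will:

- Build the image routing-image from the Dockerfile if it is not already available (use docker compose up --build to rebuild it after changing the app)

- Start a container from that image and map port 80 inside the container to port 8080 on the host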

This means that instead of running two commands and defining everything in the command line, we only need to run one command!

4. Conclusion

We learned what Docker is and how images and containers relate. Then, we containerized a React app with routes using the NGINX base image. Finally, we learned about Docker Compose, a common best practice for configuring and running one or more containers.